Always remember that user input is potentially malicious. Keep that assumption in mind whenever you develop anything that accepts data from a form anywhere in your web project. Thanks to a great article on the matttutt website about how to deal with indexed spam, also known as injected SEO spam content, I discovered that something horrible was happening on Our Code World.

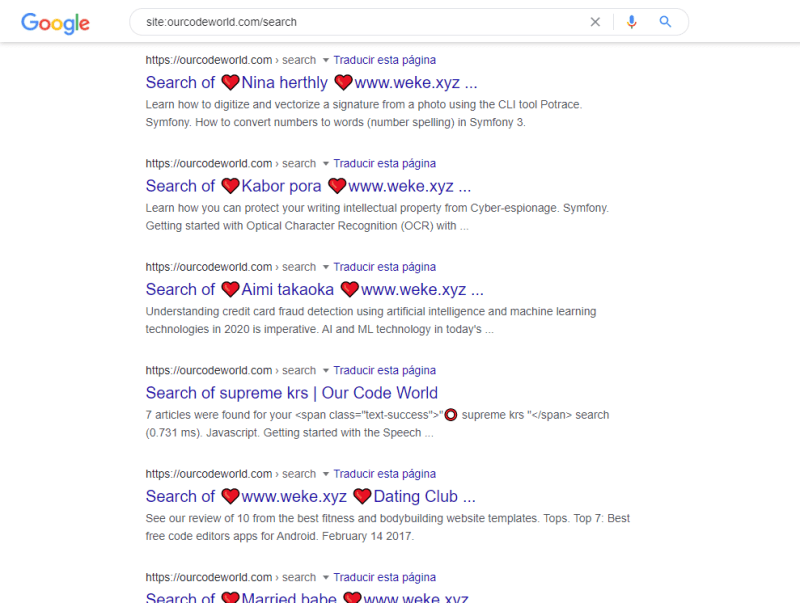

After reading about this spam method, I decided to check whether Our Code World was a victim of injected SEO spam content. To my surprise, the search module was the favorite page for this trick:

As you can see, Google had indexed about 29,400 results for the search page with spam search terms. The terms are awful: all of them relate to adult content, which is of course not the niche of my blog.

How did this happen?

The search module on the blog lets users filter the content of Our Code World by keywords. When someone types something and searches, the URL https://ourcodeworld.com/search?q=some%20text is generated, so every new search is, in theory, a new page that Google may index. Remember this, as it's important: every URL with new GET parameters, if handled inappropriately, counts as a new page on your website. As I use Google Analytics, if someone decided to spam such URLs, Google would unfortunately end up indexing those pages.
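To make the "every search is a new page" point concrete, here is a minimal Python sketch. The helper names `search_url` and `without_query` are made up for this illustration and are not part of the blog's code; the only thing taken from the article is the search URL format.

```python
from urllib.parse import quote, urlencode, urlsplit, urlunsplit

SEARCH_PAGE = "https://ourcodeworld.com/search"


def search_url(term: str) -> str:
    """Build the URL the search form produces for a term (hypothetical helper)."""
    # quote_via=quote encodes spaces as %20, matching the URL shown above.
    return f"{SEARCH_PAGE}?{urlencode({'q': term}, quote_via=quote)}"


def without_query(url: str) -> str:
    """Drop the query string and fragment from a URL (hypothetical helper)."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


# Two different search terms give two different URLs that a crawler can index...
urls = {search_url("some text"), search_url("spam keywords here")}
print(len(urls))  # 2

# ...even though both point at the same page once the query string is ignored.
print({without_query(u) for u in urls})  # {'https://ourcodeworld.com/search'}
```

Anyone can generate as many of these URLs as they like just by changing the value of `q`, which is what makes the search page attractive for this kind of spam.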

Google probably doesn't want to rank your blog's internal search results pages, and you're wasting a lot of Googlebot's energy, if you will, on pages that may not do your blog much good. However, they were indexed anyway, and therefore they are harming the site in some way.

What are the consequences?

As shown in the image, spammers insert keywords into the blog, such as drug-related terms and adult content. This is a black-hat SEO technique, also known as the pharma hack.

Solution in my case

Fortunately, in my case, I don't need the /search URLs of the blog to be indexed by Google, as search is supposed to be personal. The first thing to do is to prevent such pages from being indexed by using the following meta tags on the page:

<meta name="robots" content="noindex">

<meta name="googlebot" content="noindex">

<meta name="googlebot-news" content="nosnippet">

Also add the X-Robots-Tag header to the response of the search page to prevent indexing:

X-Robots-Tag: noindex

In some cases, you will need the search page itself to be indexed, but without the query string. You can allow indexing and still avoid the injected SEO spam problem by using a canonical URL in your markup:

<link rel="canonical" href="https://ourcodeworld.com/search" />

Cleaning the mess

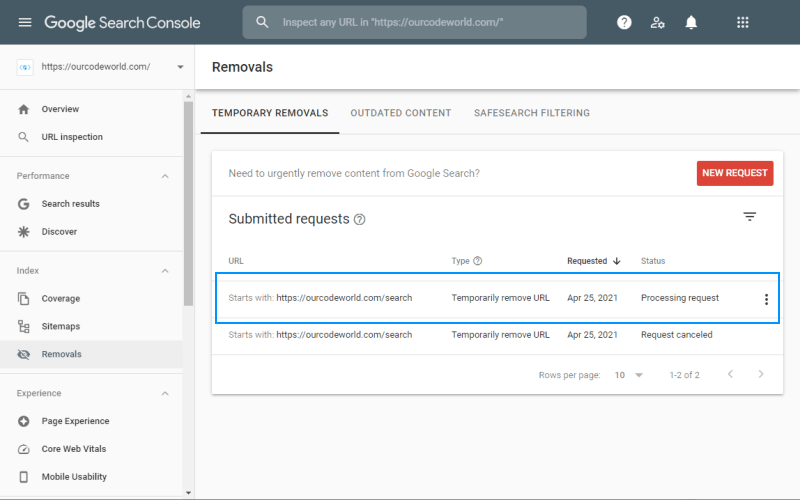

Now the only thing left is to remove all the injected SEO spam content that is already on Google under my domain name. I use Google Search Console, which fortunately lets me remove such indexed content under the Removals area:

In this case, submitting a request to remove all the URLs with the prefix https://ourcodeworld.com/search should remove all the injected SEO spam on my blog.
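
So that the removed pages are not picked up again, the search page has to keep sending the noindex directive described earlier. Below is a rough Python sketch of how a server could decide when to add the X-Robots-Tag header. Python and the helper name `robots_headers` are assumptions made for this illustration and are not what Our Code World actually runs. The sketch also assumes the search module lives at exactly /search.

```python
from urllib.parse import urlsplit

# Assumption: the search module is served at exactly this path.
SEARCH_PATH = "/search"


def robots_headers(url: str) -> dict[str, str]:
    """Return extra response headers for a requested URL (hypothetical helper)."""
    # Only the path is compared, so any value of ?q=... is covered.
    if urlsplit(url).path.rstrip("/") == SEARCH_PATH:
        return {"X-Robots-Tag": "noindex"}
    return {}


print(robots_headers("https://ourcodeworld.com/search?q=some%20text"))
# {'X-Robots-Tag': 'noindex'}
print(robots_headers("https://ourcodeworld.com/"))
# {}
```

The check ignores the query string on purpose: the spam lives in the value of `q`, so the header has to be present no matter what was searched for.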

Happy coding ❤️!