In the era of big data, efficiently managing and persisting vast amounts of information is a challenge that plenty of organizations face. Handling hundreds of thousands if not millions of records daily, the need for robust, scalable, and efficient data persistence strategies has never been more critical, especially when working with legacy frameworks like Symfony 1.4. In this article, I will explain to you how to persist data at scale in Symfony 1.4 using Doctrine 1, whether you are maintaining a legacy project or looking to improve performance in existing projects.

Handling memory leaks by disabling the debugging mode

Depending on the environment where you're running your code (a controller or a task), you will need to disable the debugging tool because otherwise, Symfony will log the database queries executed in your code and your script will run out of memory sooner or later.

There are a couple of options to disable the debugging depending on where you are at as I mentioned before.

Within a controller

Within a controller is as easy as disabling the sf_debug using sfConfig:

<?php

class sandboxActions extends sfActions

{

public function executeExample(sfWebRequest $request)

{

sfConfig::set('sf_web_debug', false);

// Rest of your code ...

}

}Within a Task

An option is to disable the debugging when running the code with PHP through the command line (Disable Doctrine profiler on CLI environment). In your project configuration, disable the Symfony debug using sfConfig::set:

<?php

// config/ProjectConfiguration.class.php

class ProjectConfiguration extends sfProjectConfiguration

{

public function setup()

{

/// ... //

// When Symfony is run from the command line, disable debug mode

if (0 == strncasecmp(PHP_SAPI, 'cli', 3) || sfConfig::get('sf_environment') == 'cli'){

sfConfig::set('sf_debug', false);

}

// ... //

}

}

The other option is to disable the database profiler in your databases.yml file setting the profiler option to false (make the modification, clear the cache and then run your code):

# You can find more information about this file on the symfony website:

# http://www.symfony-project.org/reference/1_4/en/07-Databases

all:

doctrine:

class: sfDoctrineDatabase

param:

dsn: mysql:host=localhost;dbname=databasename

username: root

password: MYSQL_ROOT_PASSWORD

profiler: falseNow that the debugging has been disabled, you can proceed with the rest of the tutorial.

Persisting hundreds of thousands of entities

The following code is designed to persist a large number of records into a database in an efficient way using the Doctrine ORM (Object-Relational Mapping), using no more than 15MB of RAM during the execution (considering that the Note entity contains only 3 columns). In this code we:

- Disable the Symfony web debug toolbar. It's very important in scripts that execute many database queries, as the toolbar could otherwise use a lot of memory by storing information about each of the queries.

- Then we initialize the variables that will store the total amount of records that we are going to create, along with the chunk size, which is the number of records to be handled per database transaction.

- We need to enable the automatic freeing of query objects to help manage memory usage.

- Finally, within each transaction, a nested for loop creates and persists individual Note objects in the database.

In this case, the code is executed in a controller, but it can be used as well inside a Task (CLI command):

<?php

class sandboxActions extends sfActions

{

public function executeExample(sfWebRequest $request)

{

// Important: Disable web debug toolbar, otherwise each query will be stored in memory

// and will cause a memory leak and eventually an out of memory error

sfConfig::set('sf_web_debug', false);

// For this example we are going to persist 20,000 records in the notes table

// Total notes to create

$total = 20000;

// Chunk size to use

// This is the number of records to persist in each transaction

$chunkSize = 500;

// Get current database connection

$conn = Doctrine_Manager::getInstance()->getCurrentConnection();

// Enable automatic query object freeing

$conn->setAttribute(Doctrine_Core::ATTR_AUTO_FREE_QUERY_OBJECTS, true);

// Iterate over the total number of records to create, creating transactions of 500 records

// p. ej. 0-499, 500-999, 1000-1499, ... 19500-19999

for($i = 0; $i < $total; $i += $chunkSize)

{

// Begin current transaction

$conn->beginTransaction();

// Iterate over the chunk size

// p. ej. 0, 1, 2, 3, 4, ... 499

for($j = $i; $j < $i + $chunkSize; $j++)

{

$note = new Note();

$note

->setTitle('Note ' . $j)

->setContent('Content ' . $j)

->setDate(date('Y-m-d'))

;

$note->save();

}

// Commit current transaction

$conn->commit();

// Clean up

$conn->clear();

}

// Finally, close the connection

$conn->close();

}

}After each chunk of records is committed, we clean up any lingering objects and free memory on demand. Once all the records are inserted in the database, we close the connection to the database and that's it! You've just found a pretty simple way to persist huge amounts of data with a couple of lines of code.

You can also use the following function to obtain the amount of memory used in the script:

<?php

/**

* Get the memory usage of the current script.

*

* @return string

*/

function getUsedMemory()

{

$mem_usage = memory_get_usage(true);

if ($mem_usage < 1024)

return $mem_usage . " Bytes";

elseif ($mem_usage < 1048576)

return round($mem_usage / 1024, 2) . " KB";

else

return round($mem_usage / 1048576, 2) . " MB";

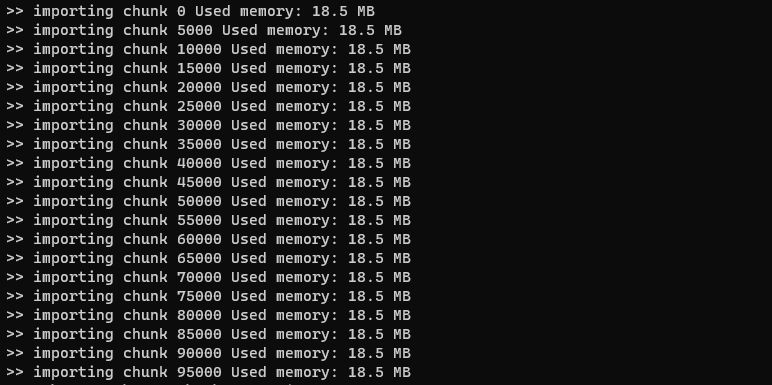

}So you can debug how much memory is it necessary to persist a specific amount of records persisting the data from chunk to chunk (for example, persisting 100K Notes in 5k chunks using only ~20MB of RAM):

This implementation is still kinda slow ... can we make it better?

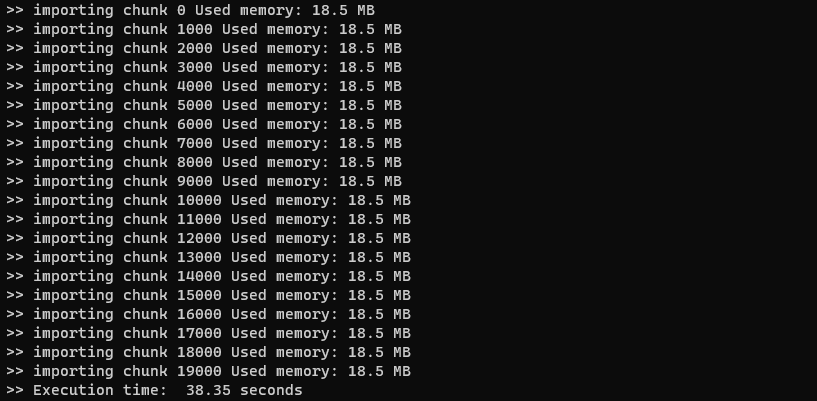

You can't deny that using Doctrine entities makes your code pretty readable and more object-oriented, the previous implementation does a great job while using the minimal amount of RAM necessary to keep the script alive while inserting the data. But, if you want to persist 100K FASTER, then persisting using entities is not the way to go. For example, let's persist 20K records using our first implementation with Doctrine entities counting the seconds it took to complete:

With Doctrine entities, persisting 20K records took about ~40 seconds to complete. Now let's write the same code using another approach with prepared statements. Prepared statements are a feature used in database management systems to execute similar database statements repeatedly with high efficiency and security:

<?php

class sandboxActions extends sfActions

{

public function executeExample(sfWebRequest $request)

{

// Important: Disable web debug toolbar, otherwise each query will be stored in memory

// and will cause a memory leak and eventually an out of memory error

sfConfig::set('sf_web_debug', false);

$config = ProjectConfiguration::getApplicationConfiguration('frontend', 'prod', true);

sfContext::createInstance($config);

$conn = Doctrine_Manager::getInstance()->getCurrentConnection();

$conn->setAttribute(Doctrine_Core::ATTR_AUTO_FREE_QUERY_OBJECTS, true);

$sql = "INSERT INTO note (title, content, date) VALUES (:title, :content, :date)";

$stmt = $conn->prepare($sql);

// Persist 20k records in chunks of 1k

$total = 20000;

$chunkSize = 1000;

for($i = 0; $i < $total; $i += $chunkSize)

{

$conn->beginTransaction();

for($j = $i; $j < $i + $chunkSize; $j++)

{

$stmt->bindValue(':title', 'Title ' . $j);

$stmt->bindValue(':content', 'Content ' . $j);

$stmt->bindValue(':date', date('Y-m-d H:i:s'));

$stmt->execute();

}

$conn->commit();

$conn->clear();

}

$conn->close();

}

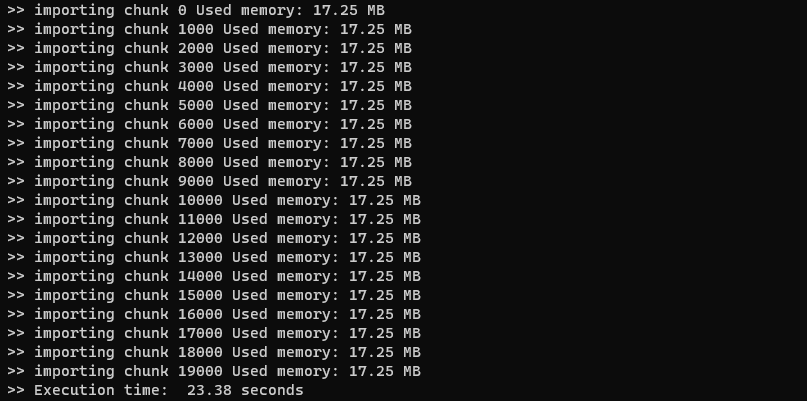

}If we execute the code and log the time it took to complete:

You will be surprised (or maybe not) that persisting 20K records using prepared statements took almost half of the time compared to persisting records using doctrine entities. Persisting entities in Doctrine is way slower than prepared statements for several reasons. Prepared statements are parsed and compiled only once, reducing significantly the parsing overhead on the database side, especially when running complex queries multiple times, which is the opposite when persisting entities through Doctrine which involves generating and parsing different SQL queries, especially if the ORM layer dynamically constructs queries based on different entity states.

Prepared statements are highly efficient for batch operations due to their nature of reusing the same query structure with different parameters. When persisting entities in bulk, Doctrine might not be able to optimize this process as effectively, leading to multiple individual queries being sent to the database, which can be slower as we saw in the example. You can play with the amount of data that you persist depending on your server's database throughput, the size of the records that you're persisting, and the number of chunks to optimize it to your logic.

Happy coding ❤️!