In healthcare AI, few terms are repeated as casually, or misunderstood as often, as human-in-the-loop. It is often presented as reassurance. A safety net. A checkbox that signals responsibility.

Yet in practice, most systems that claim to include human oversight quietly remove it.

The uncomfortable truth is this:

If human-in-the-loop is added after a model is deployed, accountability is already lost.

Across healthcare deployments, the pattern is consistent. A model is trained, validated, and integrated into workflows. Only then is a “review step” introduced. A clinician signs off. A dashboard shows predictions. A policy document states that humans retain final authority.

But design tells a different story.

Once a model is operational, its outputs begin to dominate decision-making, not because clinicians are careless, but because the system is engineered to make the model appear authoritative. This is not a behavioral failure. It is an architectural one.

Oversight Added Later Does Not Restore Accountability

In theory, requiring a human to review an AI output should preserve responsibility. In reality, post-deployment review rarely functions as true oversight. By the time a prediction reaches a clinician, the model has already shaped the frame of the decision. Risk scores arrive with confidence. Flags appear with urgency. Interfaces prioritize algorithmic signals over human context.

The human, in practice, becomes a validator rather than a decision-maker.

Research and field experience show that when AI outputs are presented without equal explanatory weight, humans tend to defer even when they disagree, a tendency commonly described as automation bias. The system trains the user. Over time, the act of review becomes procedural rather than cognitive. This dynamic is central to the argument Ali Altaf makes in The Moment Healthcare AI Gets Questioned, where he writes that once authority is implicitly granted to the model through design, human oversight becomes symbolic rather than substantive.

This is why adding a review step after deployment does not reintroduce accountability. The authority has already shifted. As Altaf argues, accountability cannot be patched into a system through policy. It must be embedded through design. If the architecture privileges the model, no amount of governance language will correct the imbalance.

Human-in-the-Loop Is an Architectural Decision

True human-in-the-loop design begins before a model ever touches production.

It requires rejecting the idea that humans exist to approve AI outputs. Instead, the system must be built so that AI exists to support human reasoning.

This distinction changes everything.

A genuinely human-in-the-loop system enforces three conditions by design, not convention.

First, the review must be non-bypassable.

If a prediction can influence downstream action without explicit human engagement, the loop is broken. Time pressure, automation shortcuts, or silent defaults all erode accountability.

Second, human reasoning must be required, not optional.

The system should not allow passive confirmation. It should demand interpretation. Why does the model think this? Which signals drove the outcome? Where does this align or conflict with clinical judgment?

Third, the interface must give equal cognitive weight to the human and the model.

This is the most overlooked requirement. When probability scores are bold and explanations are secondary, authority tilts toward the machine. When explanations are absent or superficial, humans are positioned as rubber stamps.

Design communicates power. If the design favors the model, the model leads.
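To make the first two conditions concrete, here is a minimal sketch of a review gate. It is an illustration under stated assumptions, not a description of any existing system: the names Prediction, Review, release_for_action, and the five-word rationale threshold are all hypothetical. The idea is that no prediction can reach a downstream action without a recorded review, and that a one-word confirmation does not count as a review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Prediction:
    patient_id: str
    risk_score: float      # model output in [0, 1]
    top_features: tuple    # signals the model leaned on most


@dataclass(frozen=True)
class Review:
    clinician_id: str
    agrees_with_model: bool
    rationale: str         # the clinician's own reasoning, in free text


class ReviewRequired(Exception):
    """Raised when an action is attempted without a substantive human review."""


# Hypothetical threshold; a real system would need a better test of substance.
MIN_RATIONALE_WORDS = 5


def release_for_action(prediction: Prediction, review: Optional[Review]) -> dict:
    """Return a decision record only if a human has reasoned about the prediction."""
    # Condition 1: non-bypassable. No review means no downstream action.
    if review is None:
        raise ReviewRequired("No review recorded; prediction cannot drive action.")
    # Condition 2: reasoning required. Passive confirmation is rejected.
    if len(review.rationale.split()) < MIN_RATIONALE_WORDS:
        raise ReviewRequired("Rationale too short; passive confirmation is not accepted.")
    # The record carries the model's score and the human's reasoning side by side,
    # and names the clinician, not the model, as the decision-maker.
    return {
        "patient_id": prediction.patient_id,
        "decided_by": review.clinician_id,
        "model_risk_score": prediction.risk_score,
        "model_top_features": prediction.top_features,
        "clinician_agrees": review.agrees_with_model,
        "rationale": review.rationale,
    }
```

A word count is a crude proxy for interpretation, and the third condition, equal cognitive weight, lives in the interface rather than in a function like this. The point of the sketch is narrower: the gate is enforced by the code path itself, not by a policy document.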

How Poor Design Quietly Transfers Authority to the Model

Many healthcare teams believe they have preserved human oversight because a person is technically involved in the workflow. Yet subtle design choices undermine that belief.

A single risk number presented without context appears precise even when uncertainty is high. Color coding can imply urgency that exceeds clinical relevance. Auto-generated recommendations, even when labeled as assistive, shape behavior.

Over time, these signals condition users to trust the system more than their own reasoning.

This is not malicious. It is predictable.

Humans defer to systems that appear confident, consistent, and mathematically grounded. Without explainability that exposes uncertainty and trade-offs, the AI becomes the silent authority.

The result is a system where responsibility is assigned to humans on paper, but exercised by the model in practice.
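One small way to counter the false precision of a lone risk number is to never render the score without its uncertainty. The sketch below assumes the model pipeline already supplies a lower and upper bound for each estimate; how those bounds are produced is outside this example. The function name describe_risk and the 20-point cutoff for calling an interval "wide" are hypothetical choices for illustration.

```python
# Hypothetical cutoff: intervals wider than 20 percentage points are called "wide".
WIDE_INTERVAL = 0.20


def describe_risk(point: float, lower: float, upper: float) -> str:
    """Render a risk estimate together with its interval, never the number alone."""
    if not (0.0 <= lower <= point <= upper <= 1.0):
        raise ValueError("Expected 0 <= lower <= point <= upper <= 1.")
    label = "wide" if (upper - lower) > WIDE_INTERVAL else "narrow"
    return (
        f"Estimated risk {point:.0%} "
        f"(interval {lower:.0%} to {upper:.0%}, {label} uncertainty)"
    )


print(describe_risk(0.62, 0.45, 0.80))
```

The same estimate reads very differently when the range is shown next to it, which is the kind of context a bare score hides.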

Designing for Preserved Authority

The alternative is not to slow innovation or reject AI. It is to design differently.

At Paklogics, this principle has guided how complex AI systems are structured from the ground up. Human-in-the-loop is treated not as a compliance feature, but as a system constraint.

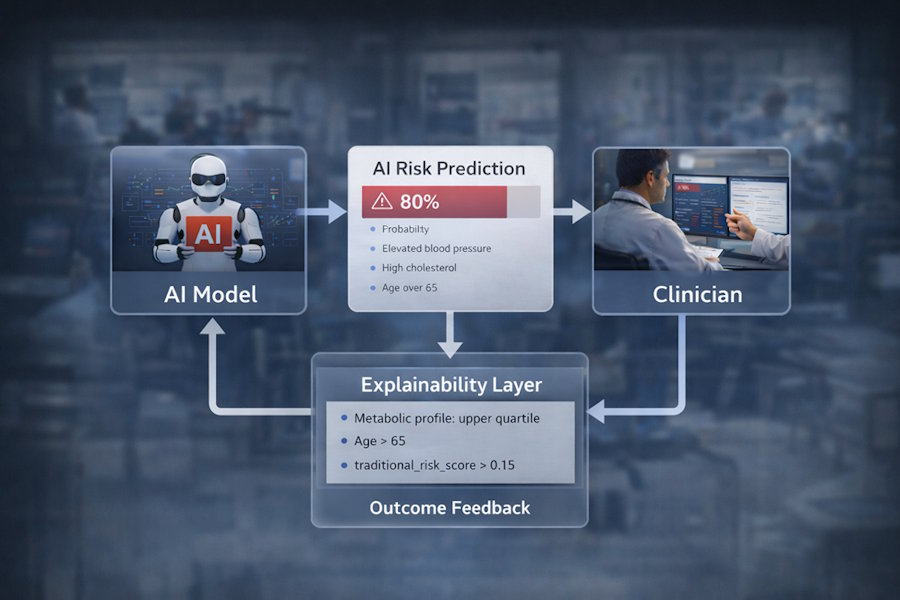

This philosophy is evident in EthoX.AI’s architecture. The platform does not merely generate predictions. It forces interaction with explanations. It surfaces feature-level reasoning alongside outcomes. It prevents silent acceptance. This approach closely mirrors the position articulated by Ali Altaf, who has repeatedly argued that explainability without enforced human reasoning is a false safeguard, and that authority in healthcare AI must be protected through system design rather than assumed through professional roles.

In the cardiovascular risk case study, the value was not the probability score itself. It was the ability of clinicians, analysts, and governance teams to interrogate why a patient was flagged, how features contributed, and whether those signals aligned with clinical logic.

The system was intentionally designed for review, not consumption.

This reflects a broader position articulated in Altaf’s work: explainability is not a reporting layer. It is infrastructure. Without it, human oversight becomes performative.

Human-in-the-Loop as a Line You Cannot Cross Later

Healthcare AI often fails not because models are inaccurate, but because authority is misallocated. When humans are positioned as validators instead of decision-makers, accountability dissolves.

The critical insight for leaders, designers, and engineers is this:

If human-in-the-loop is not designed into the system from day one, it cannot be recovered later.

No policy update can rebalance authority once workflows, interfaces, and habits have formed around the model. By then, the system has already decided who leads.

Human-in-the-loop is not a feature. It is a design stance. And in healthcare, it is the difference between assistance and abdication.

If that stance is not taken at the architectural level, the system has already failed before the first patient is ever assessed.